HashMap is one of the most high performing class in java collection framework. HashMap almost gives constant time performance for any size of data for frequent operations – insert and retrieve. It is very popular data structure and found useful for solving many problems due to O(1) time complexity for both insert and retrieve operation. This is the main reason why HashMap is considered for the heavy sized data and it will still provide faster insertion and retrieval operations.

There are mainly two factors which affect the performance of HashMap.

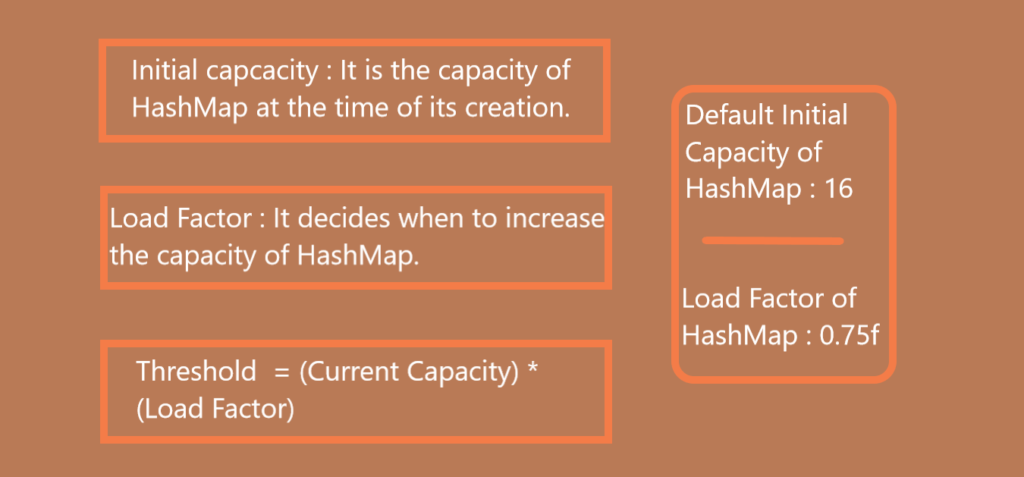

- load factor

- initial capacity

These two factors plays important role in performance of HashMap so these should be chosen very carefully while constructing an HashMap object.

Before proceeding further, It is important to understand the HashMap in depth. So please go through the below link :

HashMap and its internal working in Java complete tutorial.

HashMap Initial Capacity

As we know, HashMap uses hash table as its underlying data structure. The capacity of an HashMap is the number of buckets present in the hash table. The initial capacity is the capacity of an HashMap at the time of its creation.

The default initial capacity of the HashMap is 24 i.e 16.

The capacity of the HashMap is doubled each time as it reaches the threshold. i.e the capacity is increased to 25=32, 26=64, 27=128….. when the threshold is reached.

HashMap Load Factor

Load Factor is a measure which decides when to increase the hashmap capacity i.e buckets to maintain insert and retrieve operation complexity as O(1).

When the total number of items in hashmap goes on increasing keeping the default initial capacity of hashmap 16, At one point of time, hashmap performance will start degrading and need to increase buckets for improving performance.

The default load factor of HashMap is 0.75f.

HashMap Threshold Calculation

The threshold of an HashMap is calculated with the product of current capacity and load factor.

Threshold = (Current Capacity) * (Load Factor)

For example, if HashMap object is created with initial capacity of 16 then :

Threshold = 16 * 0.75 = 12

As we know, HashMap Load Factor is 0.75.

That means, the capacity of the HashMap is increased from 16 to 32 after the 12th element (key-value pair) is added into the HashMap object.

How Initial Capacity And Load Factor plays important role in Performance Of HashMap

Internally, Rehashing is done whenever HashMap reaches its threshold.

Rehashing is the procedure where a new HashMap object with a new capacity is created and all the old elements are placed into new object after re-calculating their hashcodes.

The process of re-hashing is costly as it is both time and space consuming so it affects the HashMap performance.

To maintain the performance, the initial capacity should be chosen very carefully. Initial capacity should be decided by keeping number of expected elements in mind. We should ensure that rehashing does not occur frequently.

You have to be very careful while choosing the load factor of HashMap too. According to Oracle doc, the default load factor of 0.75f always gives best performance in terms of both time and space.

For example :

If you choose HashMap load factor as 1.0f.

Threshold = 16 * 1.0 = 16;

It means, rehashing takes place after filling 100% of the current capacity.

This may save the space but it will increase the retrieval time of existing elements.

Suppose if you choose load factor as 0.5f, then rehashing takes place after filling 50% of the current capacity. This will increase the number of rehashing operations. This will further degrade the performance of HashMap in terms of both time and space.

So, you have to be very careful while choosing the initial capacity and load factor of an HashMap object. Choose the initial capacity and load factor such that they minimize the number of rehashing operations.

Conclusion

Congratulations Readers! In this article you have learnt the Load Factor of HashMap, Initial Capacity of HashMap, How these are important for performance of HashMap and What is the Rehashing process.

As a general rule, the default load factor (.75) offers a good tradeoff between time and space costs. Higher values decrease the space overhead but increase the lookup cost (reflected in most of the operations of the HashMap class, including get and put). The expected number of entries in the map and its load factor should be taken into account when setting its initial capacity, so as to minimize the number of rehash operations. If the initial capacity is greater than the maximum number of entries divided by the load factor, no rehash operations will ever occur.

Yes right..Thanks