Overview

In this article, We will understand the LRU cache java in detail. We will learn about the LRU Cache , Its features and How to implement LRU Cache in Java with Example.

Let’s get started :

What is LRU Cache

Least Recently Used Cache is a cache abbreviated as LRU Cache. It evicts the least recently used entry. LRU Cache size is fixed in nature.

This cache supports the get(key) and put(key,value) operations to retrieve and insert respectively.

In case of cache is full, the operation put() first removes the entry which is least recently used then it will insert a new entry.

As we all know, the first and most important purpose for any cache is providing faster and effective way for retrieval of data. So it needs some requirements to be met to achieve faster and efficient way, Those requirements are :

- As the purpose of cache is to provide faster operations, it is required that cache insert and retrieve operations should be fast , preferably O(1) time.

- When we are talking about fast access of data then it is necessary to have some limit in the size/memory of the cache. So Fixed Size of cache is one of the important requirement.

- In case of memory full of cache, it should have functionality to remove the entry before inserting the new one.

How to implement LRU Cache in Java

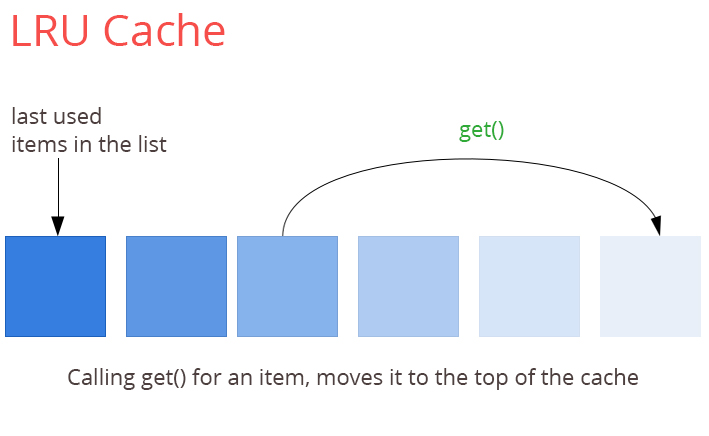

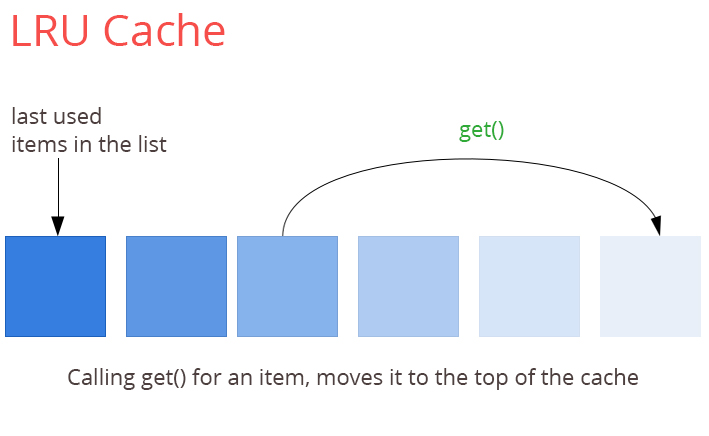

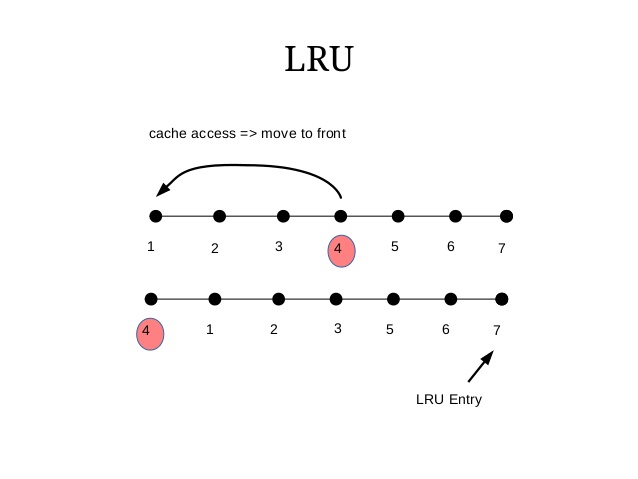

As we learnt, The LRU cache evicts the least recently used item first so we are required to :

- Track the recently used entries.

- Track the entries which are not used since long time.

- Additionally, we need to make the insertion, retrieval operations fast.

LRU Cache in Java can be implemented using data structures HashMap and doubly linked list. As they fulfills the requirements mentioned above , See How :

As we want the faster retrieval operation say taking only O(1) time, HashMap is best. HashMap provides the O(1) for lookup and insertion as well.

So HashMap solves the problem of faster operations.

However, HashMap does not provide functionality to track the recently used entries. So we need another data structure which can track the entries and still provide faster operations.

The data structure to fulfill this requirement in Doubly Linked List as it has nodes which contains address and Doubly Linked List also takes O(1) for insertion, deletion and updation provided address of node is available.

So By combining HashMap and Doubly Linked List, we will implement our LRU Cache in Java.

Doubly Linked List will hold the values of the key and HashMap will hold the addresses and keys of the nodes of the list.

LRU Cache implementation in Java Example

In the below example, We have :

- Node Class.

- LRUCache class.

We will follow the below approach in our example :

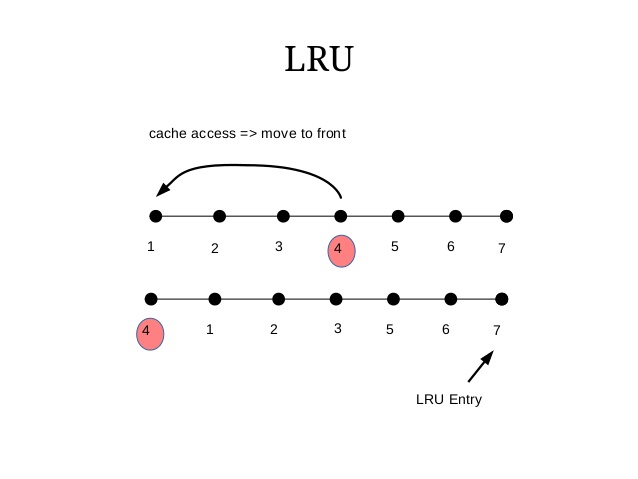

- We will always remove the item / element from bottom/right of the list and will add element on start of the list.

- As entry is accessed , the entry will move to the top/left-most.

- This way, Recently used entries will be on top/left and least recently used will be on bottom/right of the list.

Let’s understand the example :

import java.util.HashMap;

import java.util.Map;

//The Node class for doubly linked list

class Node<K, V> {

K key;

V value;

Node<K, V> next;

Node<K, V> prev;

public Node(Node<K, V> prev, Node<K, V> next, K key, V value) {

this.prev = prev;

this.next = next;

this.key = key;

this.value = value;

}

}

//The class for LRU Cache storage and its operations

public class {

// Variable to store the least recently used element

private Node<K, V> lruElement;

// Variable to store the most recently used element

private Node<K, V> mruElement;

private Map<K, Node<K, V>> container;

private int capacity;

private int currentSize;

// Constructor for setting the values in instance variables

public LRUCache(int capacity) {

this.capacity = capacity;

this.currentSize = 0;

lruElement = new Node<K, V>(null, null, null, null);

mruElement = lruElement;

container = new HashMap<K, Node<K, V>>();

}

// The get method to perform the retrieve operations on data

public V get(K key) {

Node<K, V> tempNode = container.get(key);

if (tempNode == null) {

return null;

}

// In case the MRU leave the list as it is :

else if (tempNode.key == mruElement.key) {

return mruElement.value;

}

// Getting the Next and Previous Nodes

Node<K, V> nextNode = tempNode.next;

Node<K, V> prevNode = tempNode.prev;

// If LRU is updated at the left-most

if (tempNode.key == lruElement.key) {

nextNode.prev = null;

lruElement = nextNode;

}

// In case we are in the middle, we are required to update the items before and

// after our item

else if (tempNode.key != mruElement.key) {

prevNode.next = nextNode;

nextNode.prev = prevNode;

}

// And here we are finally moving our item to MRU

tempNode.prev = mruElement;

mruElement.next = tempNode;

mruElement = tempNode;

mruElement.next = null;

return tempNode.value;

}

// The put method to perform the insert operations on cache

public void put(K key, V value) {

if (container.containsKey(key)) {

return;

}

// Inserting the new Node at the right-most end position of the linked-list

Node<K, V> myNode = new Node<K, V>(mruElement, null, key, value);

mruElement.next = myNode;

container.put(key, myNode);

mruElement = myNode;

// Deleting the entry of position left-most of LRU cache and also updating the

// LRU pointer

if (currentSize == capacity) {

container.remove(lruElement.key);

lruElement = lruElement.next;

lruElement.prev = null;

}

// Updating the size of container for the firstly added entry and updating the

// LRU pointer

else if (currentSize < capacity) {

if (currentSize == 0) {

lruElement = myNode;

}

currentSize++;

}

}

}

In the above example :

- The Node class is basically used for implementing the Doubly Linked List data structure. It has the previous reference, next reference, key and the value.

- Whenever, we are getting any value by using the get() method of the LRUCache class, the entry or element is moved to the right-most position of the list so that we can track the Most recently used element (MRU Element).

- Whenever, we are inserting any element in the cache using put()method, It gets added at right-most position of the list.

- If the memory is full , The least recently used element in deleted from the list.

Testing the LRU Cache Java

Now, In this segment we will test the LRU cache java using JUnit.

import static org.junit.jupiter.api.Assertions.assertEquals;

import org.junit.jupiter.api.Test;

public class LRUCacheTest {

private LRUCache<Integer, Integer> c;

public LRUCacheTest() {

this.c = new LRUCache<>(2);

}

@Test

public void testCacheStartsEmpty() {

assertEquals(c.get(1), null);

}

@Test

public void testSetBelowCapacity() {

c.put(1, 1);

assertEquals(c.get(1), 1);

assertEquals(c.get(2), null);

c.put(2, 4);

assertEquals(c.get(1), 1);

assertEquals(c.get(2), 4);

}

@Test

public void testCapacityReachedOldestRemoved() {

c.put(1, 1);

c.put(2, 4);

c.put(3, 9);

assertEquals(c.get(1), null);

assertEquals(c.get(2), 4);

assertEquals(c.get(3), 9);

}

@Test

public void testGetRenewsEntry() {

c.put(1, 1);

c.put(2, 4);

assertEquals(c.get(1), 1);

c.put(3, 9);

assertEquals(c.get(1), 1);

assertEquals(c.get(2), null);

assertEquals(c.get(3), 9);

}

}

Conclusion

You have learned LRU Cache Java, Its features, Its implementation in Java with Example, Testing of LRU Cache using JUnit.